Decoding the Secrets: Interpreting Machine Learning Models

Machine Learning models have spread rapidly in recent years. Because they can process vast amounts of data and often make accurate predictions, they are now widely used in industries such as finance, healthcare, and marketing. However, one challenge many researchers face is understanding how these models actually work and interpreting their decisions. In this article, we look at the main approaches to interpreting Machine Learning models and shed light on their inner workings.

The Black Box Conundrum

Machine Learning models, such as deep neural networks, are often characterized as black boxes. They take inputs, apply complex mathematical operations, and produce outputs without revealing the decision-making process. This lack of transparency can be daunting when attempting to gain insights into why a model made a particular prediction.

Interpretability has become a critical area of research in Machine Learning. Understanding how a model reaches its decisions helps us judge when those decisions can be trusted, and it makes potential biases or errors easier to identify.

4.5 out of 5

| Language | : | English |

| File size | : | 19537 KB |

| Text-to-Speech | : | Enabled |

| Screen Reader | : | Supported |

| Enhanced typesetting | : | Enabled |

| Print length | : | 448 pages |

Inherent Interpretability vs. Post-hoc Interpretability

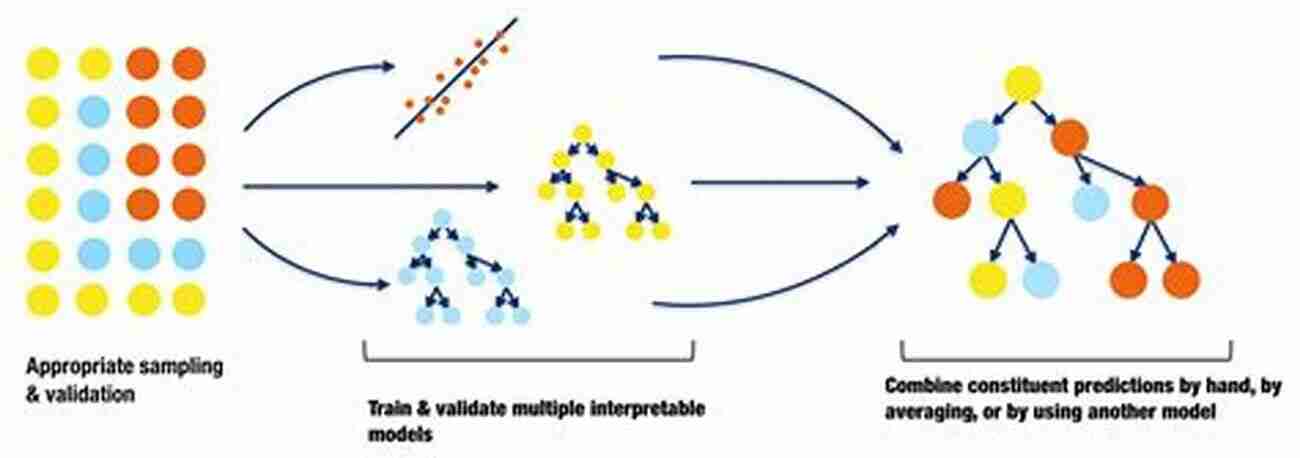

Interpretability in Machine Learning can be achieved through two main approaches: inherent interpretability and post-hoc interpretability.

Inherent interpretability refers to models that are transparent by design. These models, such as linear regression or decision trees, provide clear insights into their decision-making process. However, they often lack the capacity to capture intricate patterns in the data and may sacrifice accuracy in certain cases.
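To make "transparent by design" concrete, here is a minimal sketch of how a linear model's prediction splits into one additive term per feature. The feature names, coefficients, and intercept are hypothetical values chosen for illustration, not fitted to any real data.

```python
def explain_linear_prediction(intercept, weights, features):
    """Split a linear model's prediction into one additive term per feature."""
    contributions = {name: weights[name] * features[name] for name in weights}
    prediction = intercept + sum(contributions.values())
    return prediction, contributions


# Hypothetical coefficients for an illustrative risk score (assumed, not fitted).
intercept = 10.0
weights = {"age": 0.5, "bmi": 2.0}
patient = {"age": 40.0, "bmi": 25.0}

prediction, contributions = explain_linear_prediction(intercept, weights, patient)
print(prediction)     # 80.0
print(contributions)  # {'age': 20.0, 'bmi': 50.0}
```

Because the prediction is just the intercept plus these terms, the explanation is exact rather than an approximation, which is what sets inherently interpretable models apart from post-hoc techniques.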

On the other hand, post-hoc interpretability focuses on understanding complex models that are not inherently interpretable. Techniques like feature importance, saliency maps, and partial dependence plots can provide valuable insights into why a model made specific predictions. These techniques allow us to identify the key features and patterns that influenced the model’s decision.
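One common way to estimate feature importance for an arbitrary model is permutation importance: shuffle one feature column at a time and measure how much the error grows. The sketch below uses only the standard library. The `black_box` function and the synthetic data are assumptions made for illustration, standing in for a trained model and a held-out dataset.

```python
import random


def mse(y_true, y_pred):
    """Mean squared error between two equally long sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the dataset with only column j shuffled.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            permuted = mse(y, [predict(row) for row in X_perm])
            increases.append(permuted - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def black_box(row):
    """Hypothetical model: strong use of feature 0, weak use of 1, none of 2."""
    return 3.0 * row[0] + 0.5 * row[1] ** 2


data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [black_box(row) for row in X]

importances = permutation_importance(black_box, X, y)
print(importances)
```

The feature the model ignores receives an importance of zero, while the feature with the largest effect on the output receives the largest score. This treats the model purely as a function from inputs to outputs, so the same code would work for any predictor.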

Explaining Predictions with LIME

One popular tool for interpreting Machine Learning models is LIME (Local Interpretable Model-Agnostic Explanations). LIME explains an individual prediction by fitting a simple, interpretable surrogate model, such as a sparse linear model, to the complex model's behavior in the neighborhood of that prediction. The surrogate's weights show which features influenced the model's decision locally.

For example, in a healthcare context, LIME can help interpret why a particular patient was classified as having a higher risk of developing a certain disease. By analyzing the important features identified by LIME, healthcare professionals can better understand the factors contributing to the prediction and make informed decisions.
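The idea behind a local surrogate can be sketched in a few lines. This is not the API of the `lime` package, only a simplified illustration of the same principle: perturb the instance, query the model, weight each sample by its proximity, and fit a weighted linear model. The `black_box` function, the noise scale, and the kernel width are all assumptions chosen for the example.

```python
import math
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def explain_locally(predict, instance, n_samples=500, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]  # perturbed sample
        dist2 = sum((a - b) ** 2 for a, b in zip(z, instance))
        rows.append([1.0] + z)                              # leading 1 = intercept
        targets.append(predict(z))
        weights.append(math.exp(-dist2 / kernel_width ** 2))
    k = len(instance) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    A = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(k)]
         for i in range(k)]
    b = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets))
         for i in range(k)]
    beta = solve(A, b)
    return beta[0], beta[1:]


def black_box(x):
    """Hypothetical nonlinear model standing in for a complex classifier."""
    return x[0] ** 2 + 3.0 * x[1]


intercept, coefficients = explain_locally(black_box, [1.0, 0.0])
print(coefficients)
```

Around the point (1, 0), this model's slope is 2 along the first feature and 3 along the second, so the surrogate's coefficients should land close to those values. The explanation is only valid near the chosen instance. A different patient, in the healthcare example, could receive quite different weights.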

Interpreting Deep Neural Networks

While deep neural networks are often considered difficult to interpret, research in interpretability has made significant progress in understanding their decision-making process. Techniques such as Grad-CAM, which highlights the important regions of an image that influenced the model's prediction, have proven valuable in interpreting convolutional neural networks.
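The core arithmetic of Grad-CAM is short enough to sketch without a deep learning framework. Each feature map of the last convolutional layer is weighted by the spatial average of the gradient of the class score with respect to that map, the weighted maps are summed, and negative values are clipped. In practice the activations and gradients would come from a trained network. The tiny 2x2 arrays below are made-up values used only to show the computation.

```python
def grad_cam(feature_maps, gradients):
    """Combine feature maps into a heatmap using gradient-averaged weights.

    feature_maps, gradients: lists of K channels, each an H x W nested list.
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    # One weight per channel: the spatial mean of that channel's gradients.
    alphas = [sum(sum(row) for row in grad) / (height * width)
              for grad in gradients]
    heatmap = []
    for i in range(height):
        heatmap_row = []
        for j in range(width):
            value = sum(a * fmap[i][j] for a, fmap in zip(alphas, feature_maps))
            heatmap_row.append(max(0.0, value))  # ReLU keeps positive evidence
        heatmap.append(heatmap_row)
    return heatmap


# Made-up activations and gradients for two channels (illustrative only).
feature_maps = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]

heatmap = grad_cam(feature_maps, gradients)
print(heatmap)  # [[0.5, 0.0], [0.0, 1.0]]
```

The second channel has negative gradients, so the locations where it is active are suppressed, and only the regions supported by the first channel remain in the heatmap. A real pipeline would then upsample this map to the input image size and overlay it on the image.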

Furthermore, various research initiatives aim to increase the transparency of deep learning models by developing methods to extract relevant information from hidden layers. These methods, including Layer-wise Relevance Propagation (LRP), provide valuable insights into the inner workings of deep neural networks and help interpret their decisions.
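As a rough illustration of how LRP works, the sketch below applies one commonly used propagation rule, the epsilon rule, to a single dense layer without bias. The relevance assigned to each output neuron is redistributed to the inputs in proportion to how much each input contributed to that neuron's pre-activation. The activations, weights, and output relevances are hypothetical numbers, and a full implementation would repeat this step layer by layer from the output back to the input.

```python
def lrp_epsilon(activations, weights, relevance_out, eps=1e-9):
    """Propagate relevance back through one bias-free dense layer.

    activations:   inputs a_i to the layer
    weights:       weights[i][j] connects input i to output j
    relevance_out: relevance R_j assigned to each output neuron
    """
    n_in, n_out = len(activations), len(relevance_out)
    # Pre-activations z_j = sum_i a_i * w_ij
    z = [sum(activations[i] * weights[i][j] for i in range(n_in))
         for j in range(n_out)]
    # Small stabilizer with the sign of z_j avoids division by zero.
    z_stab = [zj + (eps if zj >= 0 else -eps) for zj in z]
    return [
        sum(activations[i] * weights[i][j] / z_stab[j] * relevance_out[j]
            for j in range(n_out))
        for i in range(n_in)
    ]


# Hypothetical layer: two inputs, two outputs.
activations = [1.0, 2.0]
weights = [[1.0, -1.0],
           [0.5, 1.0]]
relevance_out = [2.0, 1.0]

relevance_in = lrp_epsilon(activations, weights, relevance_out)
print(relevance_in)
print(sum(relevance_in), sum(relevance_out))
```

With a very small epsilon and no bias term, the total relevance is approximately conserved from one layer to the next, which is the property that lets LRP attribute a prediction to the input features.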

Challenges and Future Directions

While significant progress has been made in interpreting Machine Learning models, challenges still exist. One common challenge is the tradeoff between accuracy and interpretability. More interpretable models may sacrifice prediction accuracy, especially in complex tasks.

Additionally, as models become increasingly complex and data sets grow in size, understanding their decisions becomes more challenging. Researchers continue to explore methods to effectively interpret these models without sacrificing their accuracy or increasing computational costs.

Interpreting Machine Learning models is an ongoing research area that is vital to understanding their decision-making process. Techniques like LIME and Grad-CAM offer valuable ways to interpret complex models, while inherent interpretability provides transparency in simpler models. As the field continues to evolve, further advances in interpretability should make Machine Learning models even more valuable in various industries.


Understand model interpretability methods and apply the most suitable one for your machine learning project. This book details the concepts of machine learning interpretability along with different types of explainability algorithms.

You’ll begin by reviewing the theoretical aspects of machine learning interpretability. In the first few sections you’ll learn what interpretability is, what the common properties of interpretability methods are, the general taxonomy for classifying methods into different categories, and how the methods should be assessed in terms of human factors and technical requirements. Using a holistic approach featuring detailed examples, this book also includes quotes from actual business leaders and technical experts to showcase how real-life users perceive interpretability and its related methods, goals, stages, and properties.

Progressing through the book, you’ll dive deep into the technical details of the interpretability domain. Starting off with the general frameworks of different types of methods, you’ll use a data set to see how each method generates output with actual code and implementations. These methods are divided into different types based on their explanation frameworks, with some common categories being feature-importance-based methods, rule-based methods, saliency map methods, counterfactuals, and concept attribution. The book concludes by showing how data affects interpretability and some of the pitfalls prevalent when using explainability methods.

What You’ll Learn

- Understand machine learning model interpretability

- Explore the different properties and selection requirements of various interpretability methods

- Review the different types of interpretability methods used in real life by technical experts

- Interpret the output of various methods and understand the underlying problems

Who This Book Is For

Machine learning practitioners, data scientists, and statisticians interested in making machine learning models interpretable and explainable; students pursuing courses in data science and business analytics.
